2026

ICPC 2026

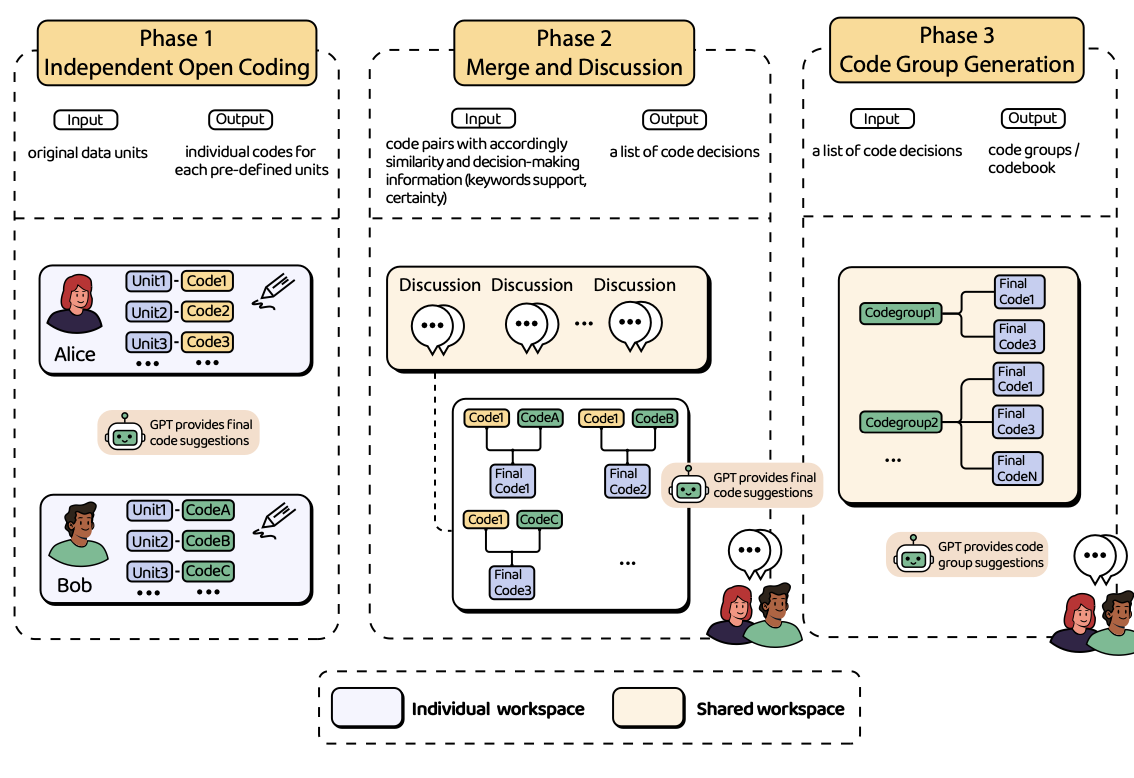

CHI 2024

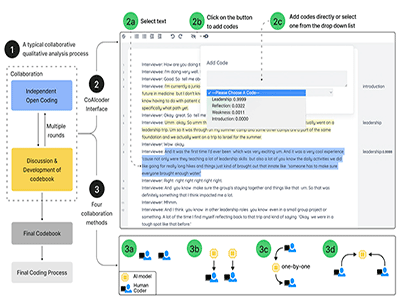

TOCHI 2023

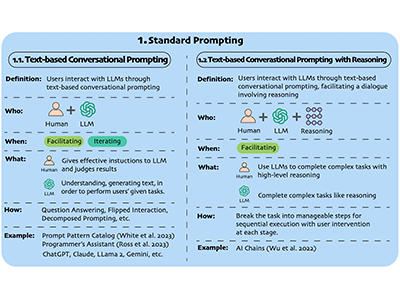

CHI EA 2024

2025

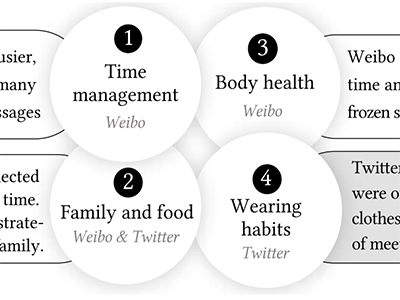

CHI EA 2022

The 4th HCI+NLP Workshop at EMNLP2025

Workshop Proposal
